Planetscale Operator vs Vitess Orchestrator

The Planetscale Vitess Operator brings a cluster to the desired state. For example, if we ask for 3 tablets, the operator makes sure that 3 tablets are always running. However, the tablets themselves still have to be managed from the command line: operations such as reparenting, or simply monitoring the tablets, have to be done there.
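The "desired state" behaviour described above can be pictured as a reconcile step that compares what was asked for with what is actually running. This is a toy model, not the operator's code: the `tablet-N` names and the `reconcile` helper are hypothetical, and a real operator works on Kubernetes objects rather than plain name strings.

```python
from typing import List, Set, Tuple


def desired_tablets(count: int, prefix: str = "tablet") -> Set[str]:
    """Tablet names a toy spec asks for: tablet-0 .. tablet-(count-1)."""
    return {f"{prefix}-{i}" for i in range(count)}


def reconcile(count: int, running: Set[str]) -> Tuple[List[str], List[str]]:
    """One pass of a toy desired-state loop.

    Compares what the spec asks for with what is observed and returns
    (to_create, to_delete). A controller runs this pass again every time
    it sees a change, so a crashed tablet is simply recreated.
    """
    desired = desired_tablets(count)
    to_create = sorted(desired - running)
    to_delete = sorted(running - desired)
    return to_create, to_delete


if __name__ == "__main__":
    # tablet-1 has died and an unexpected tablet-9 is running.
    print(reconcile(3, {"tablet-0", "tablet-2", "tablet-9"}))
```

Running it prints `(['tablet-1'], ['tablet-9'])`: the missing tablet is recreated and the extra one removed. Nothing in this loop decides which tablet is the primary.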

Vitess Orchestrator makes managing tablets easier. Through its graphical user interface (GUI) we can both monitor tablets easily and manage them with drag and drop.

Where Vitess Orchestrator Comes From

Its description is as follows:

vtorc is a fork of the Orchestrator customized to run as a Vitess component.

GitHub link: https://github.com/openark/orchestrator

The queries used by Orchestrator are in the orchestrator.conf.json file.

On GCP I did it as follows. The port to connect to (the NodePort) has to be looked up with "kubectl get svc". That way I connected to "http://172.18.17.99:30055/".

kubectl expose pod adv-vitess-cluster-adv-x-x-az1-vtorc-c0df5135-64b9dd7f97-k9jt2 --port=3000 --target-port=3000 --type=NodePort --name vtorc
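Because the service is created with `--type=NodePort`, Kubernetes assigns it a node port, and the UI is normally reachable on that port on any worker node. As a sketch, assuming the output of `kubectl get svc vtorc -o json` has been captured into a string, the URL can be assembled as below. The `vtorc_url` helper is hypothetical and the node IP must be supplied by you.

```python
import json


def vtorc_url(svc_json: str, node_ip: str, port: int = 3000) -> str:
    """Build the vtorc UI URL from `kubectl get svc vtorc -o json` output.

    node_ip is assumed to be the IP of a worker node; a NodePort service
    listens on the same nodePort on every node.
    """
    svc = json.loads(svc_json)
    if svc["spec"].get("type") != "NodePort":
        raise ValueError("service is not of type NodePort")
    for p in svc["spec"]["ports"]:
        # Match the service port given to `kubectl expose --port=3000`.
        if p["port"] == port:
            return f"http://{node_ip}:{p['nodePort']}/"
    raise ValueError(f"no service port {port} found")


if __name__ == "__main__":
    # Hypothetical, trimmed-down Service object for illustration only.
    sample = json.dumps({
        "spec": {
            "type": "NodePort",
            "ports": [{"port": 3000, "targetPort": 3000,
                       "nodePort": 30055, "protocol": "TCP"}],
        }
    })
    print(vtorc_url(sample, "172.18.17.99"))
```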

Settings

There is an example here.

Example

We do it like this. The secret is named example-cluster-config, and the settings are in its orc_config.json key.

apiVersion: planetscale.com/v2
kind: VitessCluster
metadata:
  name: {{ $.Values.keyspaceName }}-vitess-cluster
spec:
  images:
    ...
  backup:
    ...
  globalLockserver:
    ...
  cells:
    ...
  vitessDashboard:
    ...
  keyspaces:
    ...
    vitessOrchestrator:
      configSecret:
        name: example-cluster-config
        key: orc_config.json
      resources:
        limits:
          memory: 128Mi
        requests:
          cpu: 100m
          memory: 128Mi
    ...

In addition, the Orchestrator user in the secret.yaml file has to be enabled, so it becomes:

stringData:
  ...
  init_db.sql: |
    ...
    # User for Orchestrator (https://github.com/openark/orchestrator).
    # TODO: Reenable when the password is randomly generated.
    CREATE USER 'orc_client_user'@'%' IDENTIFIED BY 'orc_client_user_password';
    GRANT SUPER, PROCESS, REPLICATION SLAVE, RELOAD
      ON *.* TO 'orc_client_user'@'%';
    GRANT SELECT
      ON _vt.* TO 'orc_client_user'@'%';
    FLUSH PRIVILEGES;
    RESET SLAVE ALL;
    RESET MASTER;
  orc_config.json: |
    {
      "Debug": true,
      "Durability": "none",
      "MySQLTopologyUser": "orc_client_user",
      "MySQLTopologyPassword": "orc_client_user_password",
      "MySQLReplicaUser": "vt_repl",
      "MySQLReplicaPassword": "",
      "RecoveryPeriodBlockSeconds": 5
    }
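The `configSecret` in the VitessCluster only points at a Secret name and key, so the two have to match. A minimal sketch, assuming you would rather generate the manifest than edit secret.yaml by hand: the `make_orc_secret` helper is hypothetical, the values are the ones from the example above, and only the `orc_config.json` key is emitted (the real secret also carries `init_db.sql` and other keys).

```python
import json
from typing import Optional

# Values copied from the orc_config.json example above.
ORC_CONFIG = {
    "Debug": True,
    "Durability": "none",
    "MySQLTopologyUser": "orc_client_user",
    "MySQLTopologyPassword": "orc_client_user_password",
    "MySQLReplicaUser": "vt_repl",
    "MySQLReplicaPassword": "",
    "RecoveryPeriodBlockSeconds": 5,
}


def make_orc_secret(name: str = "example-cluster-config",
                    key: str = "orc_config.json",
                    config: Optional[dict] = None) -> dict:
    """Return a Kubernetes Secret manifest (as a dict) holding orc_config.json.

    name and key must match vitessOrchestrator.configSecret in the
    VitessCluster. Other keys of the real secret are left out on purpose.
    """
    cfg = ORC_CONFIG if config is None else config
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        # stringData takes plain text; the API server stores it base64-encoded.
        "stringData": {key: json.dumps(cfg, indent=2)},
    }


if __name__ == "__main__":
    print(json.dumps(make_orc_secret(), indent=2))
```

The printed JSON can be piped to `kubectl apply -f -`, since kubectl accepts JSON manifests as well as YAML. Applying it over an existing Secret that also carries other keys could remove those keys, so merge it into the full secret first.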

Home Screen

To expose it, we do the following:

kubectl expose pod adv-vitess-cluster-adv-x-x-az1-vtorc-c0df5135-69cd844c44-kf6zq --port=3000 --target-port=3000 --type=NodePort --name vtorc -n rlwy-07

We connect through the IP address of one of the worker nodes:

http://172.18.17.115:31919/

The home screen looks like this:

If there is an error, it looks like this:

The view inside ADV, the availability zone, looks like this:

Another view looks like this. I don't know what the black header here means.

An alias can be used instead of the IP address.

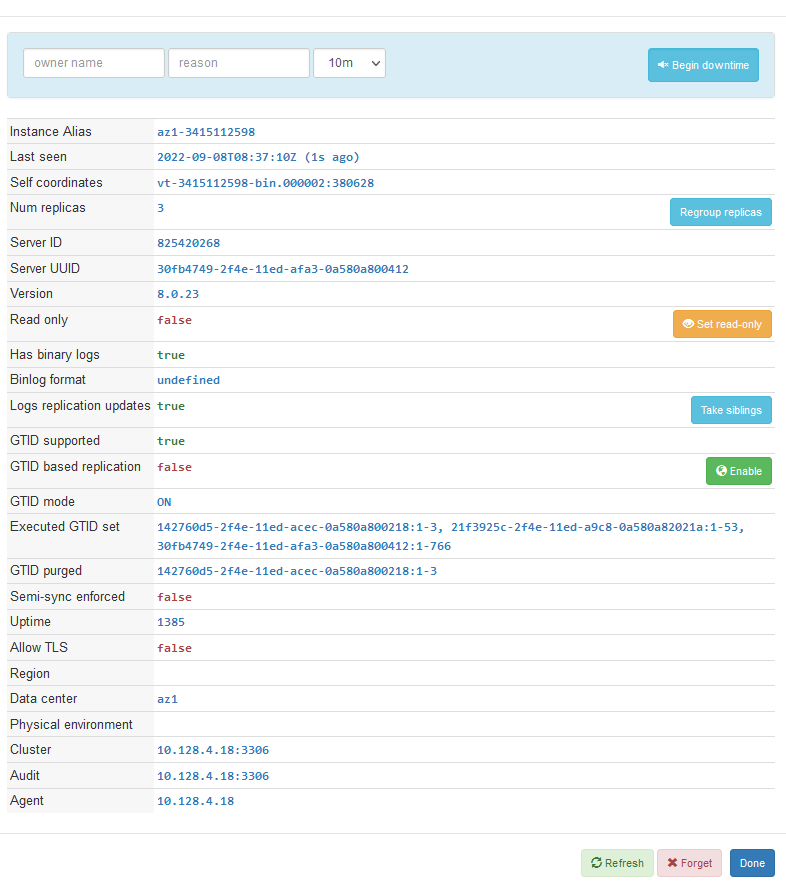

The primary looks like this:

I don't know why Binlog format is shown as undefined here, because checking with SQL gives the output below, which means the binlog should be in ROW format.

> SELECT @@global.binlog_format;
ROW

> SHOW VARIABLES LIKE '%bin%';
Variable_name                                   Value
bind_address                                    *
binlog_cache_size                               32768
binlog_checksum                                 CRC32
binlog_direct_non_transactional_updates         OFF
binlog_encryption                               OFF
binlog_error_action                             ABORT_SERVER
binlog_expire_logs_seconds                      259200
binlog_format                                   ROW
binlog_group_commit_sync_delay                  0
binlog_group_commit_sync_no_delay_count         0
binlog_gtid_simple_recovery                     ON
binlog_max_flush_queue_time                     0
binlog_order_commits                            ON
binlog_rotate_encryption_master_key_at_startup  OFF
binlog_row_event_max_size                       8192
binlog_row_image                                FULL
binlog_row_metadata                             MINIMAL
binlog_row_value_options
binlog_rows_query_log_events                    OFF
binlog_stmt_cache_size                          32768
binlog_transaction_compression                  OFF
binlog_transaction_compression_level_zstd       3
binlog_transaction_dependency_history_size      25000
binlog_transaction_dependency_tracking          COMMIT_ORDER
innodb_api_enable_binlog                        OFF
log_bin                                         ON
log_bin_basename                                /vt/vtdataroot/vt_1330809953/bin-logs/vt-1330809953-bin
log_bin_index                                   /vt/vtdataroot/vt_1330809953/bin-logs/vt-1330809953-bin.index
log_bin_trust_function_creators                 OFF
log_bin_use_v1_row_events                       OFF
log_statements_unsafe_for_binlog                ON
max_binlog_cache_size                           18446744073709547520
max_binlog_size                                 1073741824
max_binlog_stmt_cache_size                      18446744073709547520
sql_log_bin                                     ON
sync_binlog                                     1

The Vitess documentation says the same thing; I marked the relevant explanations in bold. I suspect vtorc is not displaying this information correctly.

When specifying additional configuration changes to Vitess, please keep in mind that changing the following settings is unsupported:
...
log-bin: Several Vitess features rely on the binary log being enabled.
binlog-format: Vitess only supports row-based replication. Do not change this setting from the included configuration files.
binlog-row-image: Vitess only supports the default value (FULL)
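The three settings quoted above can also be checked directly against the SHOW VARIABLES output instead of relying on the vtorc screen. A small sketch, assuming the output was captured in batch mode (for example with `mysql -N -B -e "SHOW VARIABLES LIKE '%bin%'"`), which prints one whitespace-separated name/value pair per line. The helper names are hypothetical.

```python
# The documentation uses option names (log-bin, binlog-format, ...); the
# server reports them as variables with underscores, mapped here to the
# value Vitess expects.
REQUIRED = {
    "log_bin": "ON",
    "binlog_format": "ROW",
    "binlog_row_image": "FULL",
}


def parse_variables(output: str) -> dict:
    """Parse 'name value' rows; a row with no value maps to ''."""
    result = {}
    for line in output.splitlines():
        parts = line.split(None, 1)
        if not parts:
            continue  # skip blank lines
        result[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return result


def binlog_problems(output: str) -> list:
    """Return human-readable mismatches; an empty list means all is fine."""
    variables = parse_variables(output)
    problems = []
    for name, expected in REQUIRED.items():
        actual = variables.get(name)
        if actual is None:
            problems.append(f"{name}: missing")
        elif actual.upper() != expected:
            problems.append(f"{name}: {actual} (expected {expected})")
    return problems
```

For output shaped like the listing above (log_bin ON, binlog_format ROW, binlog_row_image FULL), `binlog_problems` returns an empty list.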

The GTID outputs are as follows:

> SHOW GLOBAL VARIABLES LIKE '%gtid%';
binlog_gtid_simple_recovery        ON
enforce_gtid_consistency           ON
gtid_executed                      f5bc78a8-2516-11ed-8db6-0a580a830019:1-13035
gtid_executed_compression_period   0
gtid_mode                          ON
gtid_owned
gtid_purged                        f5bc78a8-2516-11ed-8db6-0a580a830019:1-12589
session_track_gtids                off

> SHOW VARIABLES LIKE '%gtid_next%';
gtid_next  AUTOMATIC
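These GTID sets can be read with a little interval arithmetic. From the values above, this server has executed transactions 1 to 13035 of source `f5bc78a8-...`, and 1 to 12589 have been purged, so 13035 - 12589 = 446 transactions (12590 to 13035) should still be present in the binary logs on this server. The sketch below does the same calculation for arbitrary GTID sets; it assumes classic untagged GTIDs, and the helper names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Intervals = List[Tuple[int, int]]


def parse_gtid_set(text: str) -> Dict[str, Intervals]:
    """Parse 'uuid:1-5:7,uuid2:1-3' into {uuid: [(1, 5), (7, 7)], ...}.

    Assumes untagged GTIDs; intervals are inclusive.
    """
    result: Dict[str, Intervals] = defaultdict(list)
    for part in text.replace("\n", "").split(","):
        part = part.strip()
        if not part:
            continue
        uuid, *intervals = part.split(":")
        for iv in intervals:
            start, _, end = iv.partition("-")
            result[uuid.lower()].append((int(start), int(end or start)))
    return dict(result)


def gtid_count(gtids: Dict[str, Intervals]) -> int:
    """Number of transactions in the set."""
    return sum(end - start + 1 for ivs in gtids.values() for start, end in ivs)


def subtract(a: Dict[str, Intervals], b: Dict[str, Intervals]) -> Dict[str, Intervals]:
    """Return the GTIDs in a that are not in b (set difference per server UUID)."""
    out: Dict[str, Intervals] = {}
    for uuid, ivs in a.items():
        remaining = list(ivs)
        for b_start, b_end in b.get(uuid, []):
            next_remaining: Intervals = []
            for start, end in remaining:
                if b_end < start or b_start > end:  # no overlap, keep as-is
                    next_remaining.append((start, end))
                    continue
                if start < b_start:  # piece left of the removed range
                    next_remaining.append((start, b_start - 1))
                if end > b_end:  # piece right of the removed range
                    next_remaining.append((b_end + 1, end))
            remaining = next_remaining
        if remaining:
            out[uuid] = remaining
    return out


if __name__ == "__main__":
    executed = parse_gtid_set("f5bc78a8-2516-11ed-8db6-0a580a830019:1-13035")
    purged = parse_gtid_set("f5bc78a8-2516-11ed-8db6-0a580a830019:1-12589")
    still_in_binlogs = subtract(executed, purged)
    print(gtid_count(executed), gtid_count(purged), gtid_count(still_in_binlogs))
```

With the values from this server it prints `13035 12589 446`.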

The MASTER status is as follows. I removed the Binlog_Do_DB and Binlog_Ignore_DB columns.

> SHOW MASTER STATUS;
File                      Position  Executed_Gtid_Set
vt-1330809953-bin.000015  1909      f5bc78a8-2516-11ed-8db6-0a580a830019:1-13035

SHOW SLAVE STATUS returns an empty result.

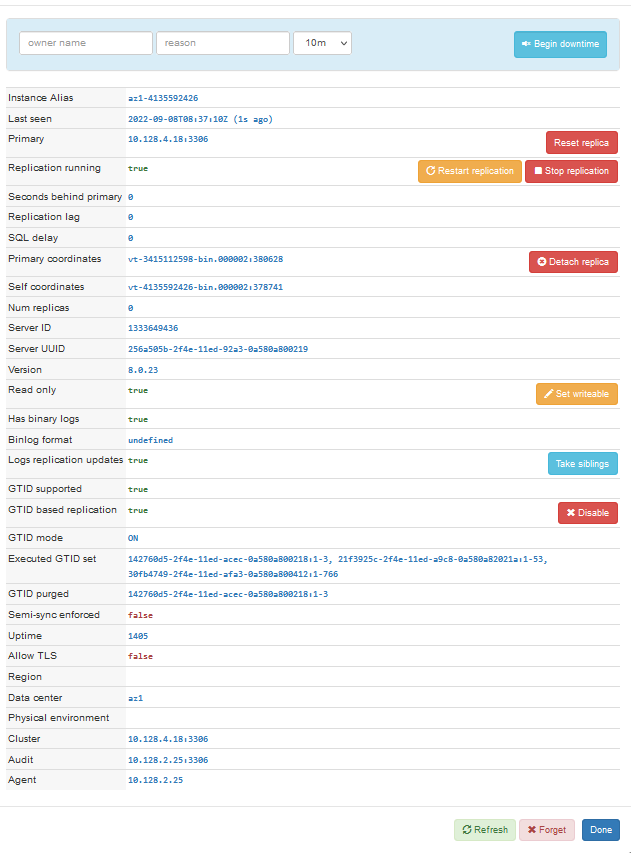

The replica looks like this:

Then I ran the following:

vc PlannedReparentShard --keyspace_shard ADV/- --new_primary az1-1330809953
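The `--new_primary` value is a tablet alias of the form `<cell>-<uid>`: here the cell is `az1` and the uid is `1330809953`, the same number that appears in the data directory `/vt/vtdataroot/vt_1330809953` and in the binlog file names above. Shard `-` is the full key range, i.e. the keyspace is not sharded. The sketch below splits the alias and rebuilds the argument list. The helper names are hypothetical; `vc` appears to be a local alias for the Vitess control client, so the client name is a parameter, and the flags are copied from the command above.

```python
import re
from typing import List, Tuple

# A Vitess tablet alias has the form "<cell>-<uid>", e.g. "az1-1330809953".
_ALIAS_RE = re.compile(r"^(?P<cell>.+)-(?P<uid>\d+)$")


def parse_tablet_alias(alias: str) -> Tuple[str, int]:
    """Split a tablet alias into (cell, uid)."""
    match = _ALIAS_RE.match(alias)
    if match is None:
        raise ValueError(f"not a tablet alias: {alias!r}")
    return match.group("cell"), int(match.group("uid"))


def tablet_dir_name(alias: str) -> str:
    """Directory name under /vt/vtdataroot: 'vt_' + uid padded to 10 digits."""
    _, uid = parse_tablet_alias(alias)
    return f"vt_{uid:010d}"


def planned_reparent_args(client: str, keyspace: str, shard: str,
                          new_primary: str) -> List[str]:
    """Argument list for the PlannedReparentShard command used above."""
    parse_tablet_alias(new_primary)  # fail early on a malformed alias
    return [client, "PlannedReparentShard",
            "--keyspace_shard", f"{keyspace}/{shard}",
            "--new_primary", new_primary]


if __name__ == "__main__":
    print(tablet_dir_name("az1-1330809953"))
    print(planned_reparent_args("vc", "ADV", "-", "az1-1330809953"))
```

The returned list can be passed as-is to `subprocess.run(...)`.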

The command returned an error, but on the screen the replica became the primary.